Overview of Artificial Intelligence

Artificial Intelligence (AI) is a branch of computer science concerned with building machines that can perform tasks that typically require human intelligence, such as learning, problem-solving, decision-making, and understanding and processing natural language. AI aims to develop computer systems that can perceive, analyze, reason, learn, and make decisions autonomously. Like the World Wide Web (WWW), AI is a technology that is going to change everything. The WWW fundamentally changed our relationship with knowledge, moving us from a world where knowledge was scarce (but mostly reliable) to one where knowledge is abundant (but largely unreliable).

There are two main types of AI: Narrow AI and General AI. Narrow AI, also known as weak AI, is designed to perform specific tasks within a limited domain. Chatbots and recommendation systems are examples of narrow AI. On the other hand, General AI, also known as strong AI, refers to AI systems that possess human-level intelligence and can perform any intellectual task that a human can do. However, we're not quite there yet and General AI remains more of a goal for the future.

AI systems rely on data and algorithms to learn and make decisions. Machine learning is a popular approach within AI, where algorithms are trained on large datasets to recognize patterns and make predictions or take actions based on that training.

Speed

The training of an AI machine can be compared to the training of the mind of an advanced student of philosophy. That example can be extended more broadly. The formation of mechanical intelligence can be seen as parallel to the process by which the human brain matures biologically from adolescence into adulthood. Students, during the course of their secondary education, learn the fundamentals of core subjects, building up their basic view of the world. That view may not be particularly advanced or always correct, but the same is also true of a machine. Machines, like humans, learn by absorbing information and transforming it into theory for subsequent practice. When machines learn, an algorithm ingests vast amounts of data, scraped from sources on the open Internet or provided more specifically by other private sources, and collapses the results into a condensed and compressed mapping of concepts for future use. Just as humans’ biological mechanisms map sensory input onto the neural weights that connect the network of the brain’s processing units, machines similarly require a gradual strengthening of their own computational weights. Neural networks, like some high school students, can be lazy. During the early stages of training, AI will do the bare minimum, memorizing answers rather than actually learning; a model faced with 2 + 2 might initially encode the answer 4 without having mastered the underlying principles of addition. But rapidly, across a certain threshold, this approach will break down, forcing the machine to abstract upward, as humans do, to more universal axioms of knowledge.
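The difference between memorizing and learning can be illustrated with a minimal Python sketch. It is an illustration only, not a description of how production systems are built: the names `memorizer`, `train_adder`, and `adder`, the range of training sums, and the learning rate are all assumptions made for this example. A lookup table stands in for rote memorization, and a two-weight linear model trained by gradient descent stands in for a model that has abstracted the rule of addition.

```python
# Training data: every sum a + b with a and b between 0 and 4 (an assumed range).
training_pairs = [((a, b), a + b) for a in range(5) for b in range(5)]

# "Lazy" learner: stores each answer it has seen and nothing else.
memorized = {inputs: answer for inputs, answer in training_pairs}


def memorizer(a, b):
    """Return the stored answer, or None if this exact question was never seen."""
    return memorized.get((a, b))


def train_adder(pairs, steps=500, lr=0.02):
    """Fit prediction = wa * a + wb * b by gradient descent on squared error."""
    wa, wb = 0.0, 0.0
    n = len(pairs)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for (a, b), target in pairs:
            error = wa * a + wb * b - target
            grad_a += 2.0 * error * a / n
            grad_b += 2.0 * error * b / n
        wa -= lr * grad_a
        wb -= lr * grad_b
    return wa, wb


wa, wb = train_adder(training_pairs)


def adder(a, b):
    """Apply the learned weights; works for inputs outside the training range."""
    return wa * a + wb * b


print(memorizer(2, 2))  # seen during training
print(memorizer(7, 8))  # never seen, so the memorizer has no answer
print(adder(7, 8))      # the learned rule extends to unseen inputs
```

The memorizer answers only the questions it has already seen, while the trained weights settle near 1 and 1, which is the rule of addition itself.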

This is what principally distinguishes AI from ordinary computers: its mapping of the world is not programmed, but learned. In traditional software programming, a human-created algorithm instructs the machine in how to transform a set of inputs into a set of outputs. In machine learning, by contrast, human-created algorithms tell the machine only how to improve itself, allowing the machine to design its own mappings for the input-to-output transformation. As the machine “learns” from countless prior trials, failures, and adjustments, it upgrades its algorithms, iteratively redesigning its internal mappings of the patterns and connections it “sees” in the data.
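The contrast between a programmed mapping and a learned one can be sketched in a few lines of Python. This is a toy under stated assumptions: the temperature-conversion task, the function names, and the simple trial-and-error search are all chosen for illustration, and real systems typically use gradient-based training rather than this search. In the first function a human writes the rule; in the second, the human writes only a procedure for improving, and the rule emerges from the examples.

```python
def programmed_c_to_f(celsius):
    """Traditional software: a human writes the input-to-output rule directly."""
    return celsius * 9.0 / 5.0 + 32.0


def mean_squared_error(w, b, examples):
    """How wrong the mapping y = w * x + b is on the given examples."""
    return sum((w * x + b - y) ** 2 for x, y in examples) / len(examples)


def learn_by_trial_and_error(examples, step=1.0, min_step=1e-9):
    """Try small adjustments, keep the ones that reduce error, refine the step."""
    w, b = 0.0, 0.0
    best = mean_squared_error(w, b, examples)
    while step > min_step:
        improved = False
        for dw, db in ((step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)):
            trial = mean_squared_error(w + dw, b + db, examples)
            if trial < best:  # a successful trial: keep the adjustment
                w, b, best = w + dw, b + db, trial
                improved = True
        if not improved:      # every trial failed: search more finely
            step /= 2.0
    return w, b


# The learner sees only example pairs, never the formula itself.
examples = [(float(c), programmed_c_to_f(float(c))) for c in range(-50, 51, 10)]
learned_w, learned_b = learn_by_trial_and_error(examples)


def learned_c_to_f(celsius):
    """The mapping the machine designed for itself from the examples."""
    return learned_w * celsius + learned_b


print(programmed_c_to_f(37.0), learned_c_to_f(37.0))
```

Nothing in `learn_by_trial_and_error` mentions temperature; given different examples, the same improvement procedure would arrive at a different mapping.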

Periodically, human trainers will give the machine feedback on the accuracy and quality of its outputs. The machine internalizes their corrections by means of “back-propagation,” a technique that allows the effects of the trainers’ changes to ripple backward through the mathematical relationships that the machine has already created, thereby improving the overall model. For any given model, however, humans provide feedback on only a small subset of possible inputs and outputs. After the model performs at a certain level in a number of training tests, its developers trust that its established mappings will generate a safe and accurate response to all inputs, even unexpected ones, with a high probability of success. In each of these ways, AI is already expanding, and will further expand, the realm of human knowledge. But it is doing so—and we are accepting the resultant knowledge as true—by processes we do not fully understand.
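Back-propagation itself can be sketched on a very small network. The following Python example is a hedged illustration, not the method of any particular system: the two-weight network `y = w2 * tanh(w1 * x)`, the starting weights, the single training example, and the learning rate are all assumptions. It shows how an error measured at the output is passed backward, one step at a time, through the chain rule to every weight that contributed to it.

```python
import math


def forward(w1, w2, x):
    """Forward pass: one hidden unit with tanh, then a linear output."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss(w1, w2, x, target):
    """Squared error between the network output and the desired output."""
    _, y = forward(w1, w2, x)
    return (y - target) ** 2


def backward(w1, w2, x, target):
    """Backward pass: propagate the output error back to each weight."""
    h, y = forward(w1, w2, x)
    d_y = 2.0 * (y - target)    # how the loss changes with the output
    d_w2 = d_y * h              # output weight's share of the error
    d_h = d_y * w2              # error passed back to the hidden unit
    d_z = d_h * (1.0 - h * h)   # through tanh, whose derivative is 1 - tanh^2
    d_w1 = d_z * x              # first weight's share of the error
    return d_w1, d_w2


def train(w1, w2, x, target, steps=200, lr=0.1):
    """Repeatedly nudge both weights against their gradients."""
    for _ in range(steps):
        d_w1, d_w2 = backward(w1, w2, x, target)
        w1 -= lr * d_w1
        w2 -= lr * d_w2
    return w1, w2


x, target = 1.0, 0.8          # one assumed training example
start_w1, start_w2 = 0.5, -0.3
grad_w1, grad_w2 = backward(start_w1, start_w2, x, target)
initial_loss = loss(start_w1, start_w2, x, target)
final_w1, final_w2 = train(start_w1, start_w2, x, target)
final_loss = loss(final_w1, final_w2, x, target)

print(initial_loss, final_loss)
```

The correction is applied only at the output, yet both weights move, because the backward pass assigns each one its share of responsibility for the error.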

Where a typical student graduates from high school in four years, an AI model today can easily finish learning the same amount of knowledge, and dramatically more, in four days. And thus, speed has proven itself to be the first in a handful of core attributes that distinguish AI from our human form and mental capabilities. Despite having highly advanced parallelism—that is, the ability to process simultaneously different kinds of stimuli—the human brain is a slow processor of information, limited by the speed at which our biological circuits work. If a human brain’s circuits were analyzed with the same performance metrics as computers—by “clock rate” or processing speed—the average AI supercomputer is already 120 million times faster than the processing rate of the human brain. True, speed is not a strong indicator of intelligence; very dumb humans can think quickly. Nevertheless, a faster pace of processing provides two benefits as compared with the human brain: the ingestion of vastly more information and the service of many more simultaneous requests. Much of the human brain typically remains on autopilot—passively serving internal needs in guiding the beating of our heart and the movement of our limbs, and intervening to adjust only when the autopilot proves faulty. By contrast, the speed of which AI is capable allows for the programmatic emergence of great prowess, which then enables the achievement of higher, more difficult, and grander problems than those currently solvable by the human brain.

Once both the human and the machine have completed their intellectual training, both are now theoretically capable of “thinking” or, in the equivalent technical term, “inference”. In the course of an interview, an argument, or a date, a student-turned-graduate draws upon his or her education and experience. We do this not by regurgitating exact formulas, individual facts, and precise numbers from memory but by consulting a thinner layer of contemplation of and reflection on what we have learned. The human brain was never meant to memorize information for perfect recall; nor are most brains capable of doing so. What should remain instead, after innumerable lessons, essays, and exams, is a grasp of the deeper and longer-lasting concepts that those same educational tools of instruction are meant to reveal: the wonder of astronomy, the tragedy of ambition, the necessity (or not) of revolution. The same is true of AI. When a model emerges from the completion of its training run, it no longer requires access to the original data it was trained on. Rather, it is left only with a rough guiding intuition, assembled from the knowledge it has received, for answering questions, challenging reasoning, and making predictions. Just as humans don’t haul libraries of material around with them, an AI model similarly infers rather than recalls. The difference, then, is that superior speed facilitates this inference across a broader, deeper array of learned information than a human could ever hope to attain. To do this, even to answer a simple question, an AI model may perform billions of complex technical operations. Whereas a traditional computer simply retrieves specific information stored in its memory—since it is unable to arrive at the sorts of conclusions that don’t already exist there—AI launches computing in the direction of the human brain. Just as humans learn in order to think, machines train in order to infer. The second cannot come without the first.

The first phase—for both humans and machines—is the more intensive process, in both the amount of time spent and the number of resources required. A postdoctoral student may have spent two decades or more building the capability to compose—in two days—a thoughtful essay on a given subject. Similarly, training the largest AI models may take months, but the resultant inferencing can consume mere fractions of a second.
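The asymmetry between training and inference can be made concrete with a small Python sketch. Everything here is an assumption for illustration: the linear model, the hidden rule `y = 3x - 2`, the number of epochs, and the names `train` and `infer`. The sketch counts the weight updates needed during training, discards the training data, and then answers a new question with a single cheap calculation on the stored parameters.

```python
def train(examples, epochs=500, lr=0.01):
    """Training: many passes over the data, adjusting weights after each example."""
    w, b = 0.0, 0.0
    updates = 0
    for _ in range(epochs):
        for x, y in examples:
            error = (w * x + b) - y
            w -= lr * 2.0 * error * x
            b -= lr * 2.0 * error
            updates += 1
    return (w, b), updates


def infer(params, x):
    """Inference: one multiplication and one addition, with no data access."""
    w, b = params
    return w * x + b


# Assumed training set generated from the hidden rule y = 3x - 2.
examples = [(float(x), 3.0 * x - 2.0) for x in range(-5, 6)]
params, training_updates = train(examples)

# After training, the original data is no longer needed.
del examples

prediction = infer(params, 10.0)
print(training_updates, prediction)
```

The trained model consists of two numbers, and answering a new query takes one step, compared with the thousands of updates spent building those two numbers.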

Today’s AI systems already give apparently cogent and considered answers in response to human queries. In their latest and future iterations, they will operate comprehensively, crossing multiple domains of knowledge with an agility that exceeds the capacity of any human or any group of humans. For AIs, scale—in the sense of size—enables speed; as we have just seen, the larger and more thoroughly trained the machine, the faster and more exhaustive its results. What is more, by recognizing patterns in the data that elude the inquiring human operator, AI systems will be equipped to distill traditional expressions of knowledge into original responses and, out of enormous amounts of data, forge new conceptual truths. Which raises a question, or rather more than one question.

Opacity

How do we know what we know about the workings of our universe? And how do we know that what we know is true?

In most areas of knowledge, ever since the advent of the scientific method, with its insistence on experiment as the criterion of proof, any information that is not supported by evidence has been regarded as incomplete and untrustworthy.

Only transparency, reproducibility, and logical validation confer legitimacy on a claim of truth. Under the influence of this framework, recent centuries have yielded a huge expansion in human knowledge, human understanding, and human productivity—culminating with the invention of the computer and machine learning.

Today, however, in the age of AI, we face a new and peculiarly daunting challenge: information without explanation. Already, AI’s responses—which, as noted above, can take the form of highly articulate and coherent descriptions of complex concepts—arrive instantaneously. The machines’ outputs are delivered bare and unqualified, with no apparent bias or motive—but also unaccompanied by any citation of sources or other justifications. And yet, despite this lack of a rationale for any given answer, early AI systems have already engendered incredible levels of human confidence in, and reliance upon, their otherwise unexplained and seemingly oracular pronouncements. As they advance, these new “brains” could appear to be not only authoritative but infallible.

Although human feedback helps an AI machine refine its internal algorithms, the machine holds primary responsibility for detecting patterns in, and assigning weights to, the data on which it is trained. Nor, once a model is trained, does it publish the internal mathematical schema that it has concocted. As a result, the representations of reality that the machine generates remain largely opaque, even to its inventors. Today, humans attempt to assure themselves of the integrity of these machine models mainly by examining outputs alone. The internal workings remain largely impenetrable—hence the reference to some AI systems as “black boxes.” Although some researchers are attempting to reverse-engineer the outputs of these complex models into familiar algorithms, it is not yet clear whether they will succeed.

In brief, models trained via machine learning allow humans to know new things (the models’ outputs) but not to understand how the discoveries were made (the models’ internal processes). This separates human knowledge from human understanding in a way that would have been foreign to any other age of humanity. Human apperception in the modern sense has developed from the intuitions and outcomes that follow from conscious subjective experience, individual examination of logic, and the ability to reproduce the results. These methods of knowledge derive in turn from a quintessentially humanist impulse: “If I can’t do it, then I can’t understand it; if I can’t understand it, then I can’t know it to be true.”

In the framework that emerged in the Age of Enlightenment, these core elements—individual human capacity, subjective comprehension, and objective truth—all moved in tandem. By contrast, the truths produced by AI are manufactured by processes that humans cannot replicate. Machine reasoning, which does not proceed via human methods, is beyond human subjective experience and outside the capacity of humans, who cannot even fully represent the machines’ internal processes.

There are several exciting applications of AI across various fields. For example, AI is used in autonomous vehicles, healthcare for diagnosis and treatment, finance for fraud detection, and even in entertainment for creating realistic virtual characters.

It's important to note that AI is not just about replacing humans, but rather augmenting our abilities and making our lives easier. It has the potential to revolutionize industries and solve complex problems in ways that were previously unimaginable.

Kissinger, Henry A.; Schmidt, Eric; Mundie, Craig. Genesis: Artificial Intelligence, Hope, and the Human Spirit. Little, Brown and Company.

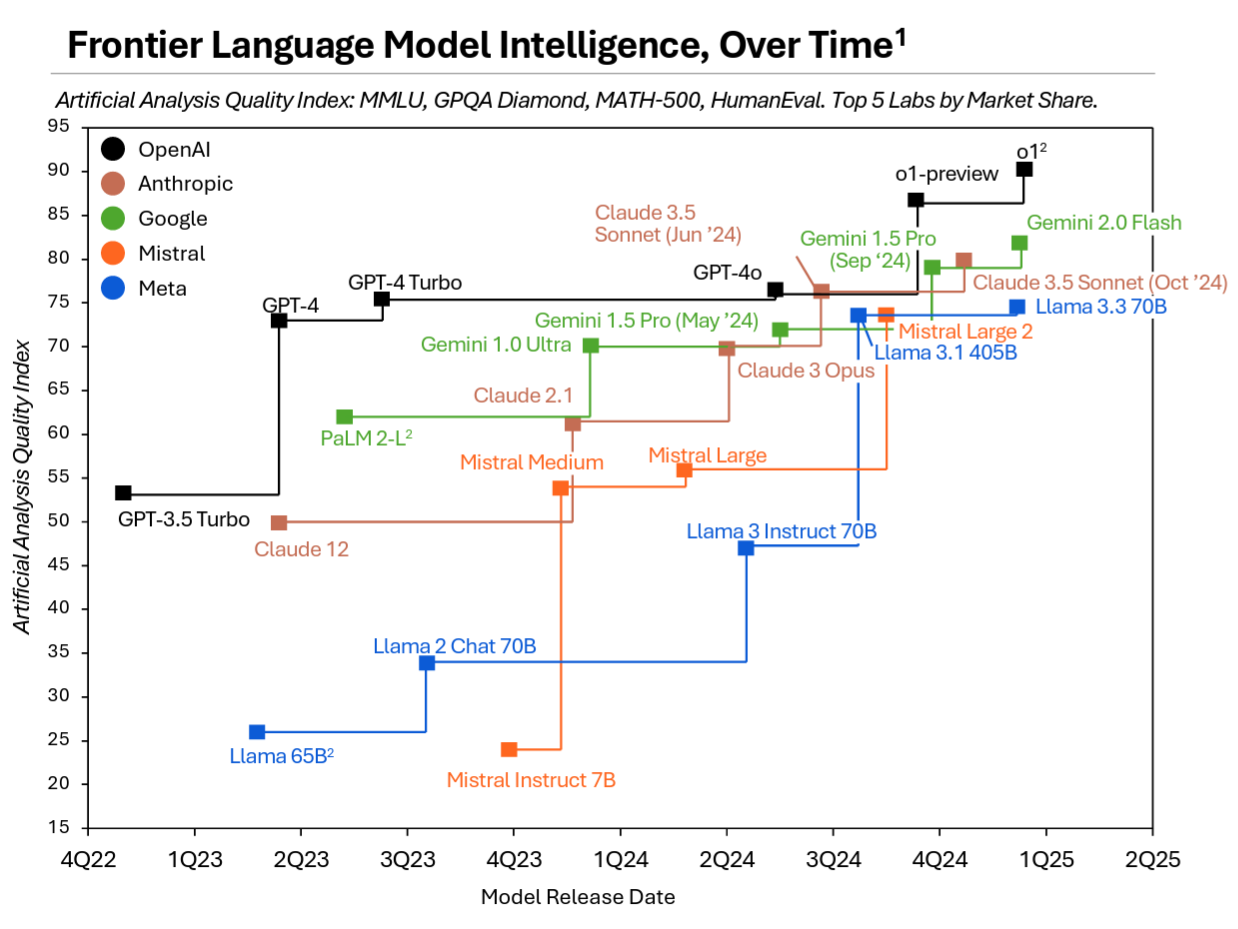

AI Intelligence Over Time

AI Intelligence over time chart. Image credit: Artificial Analysis

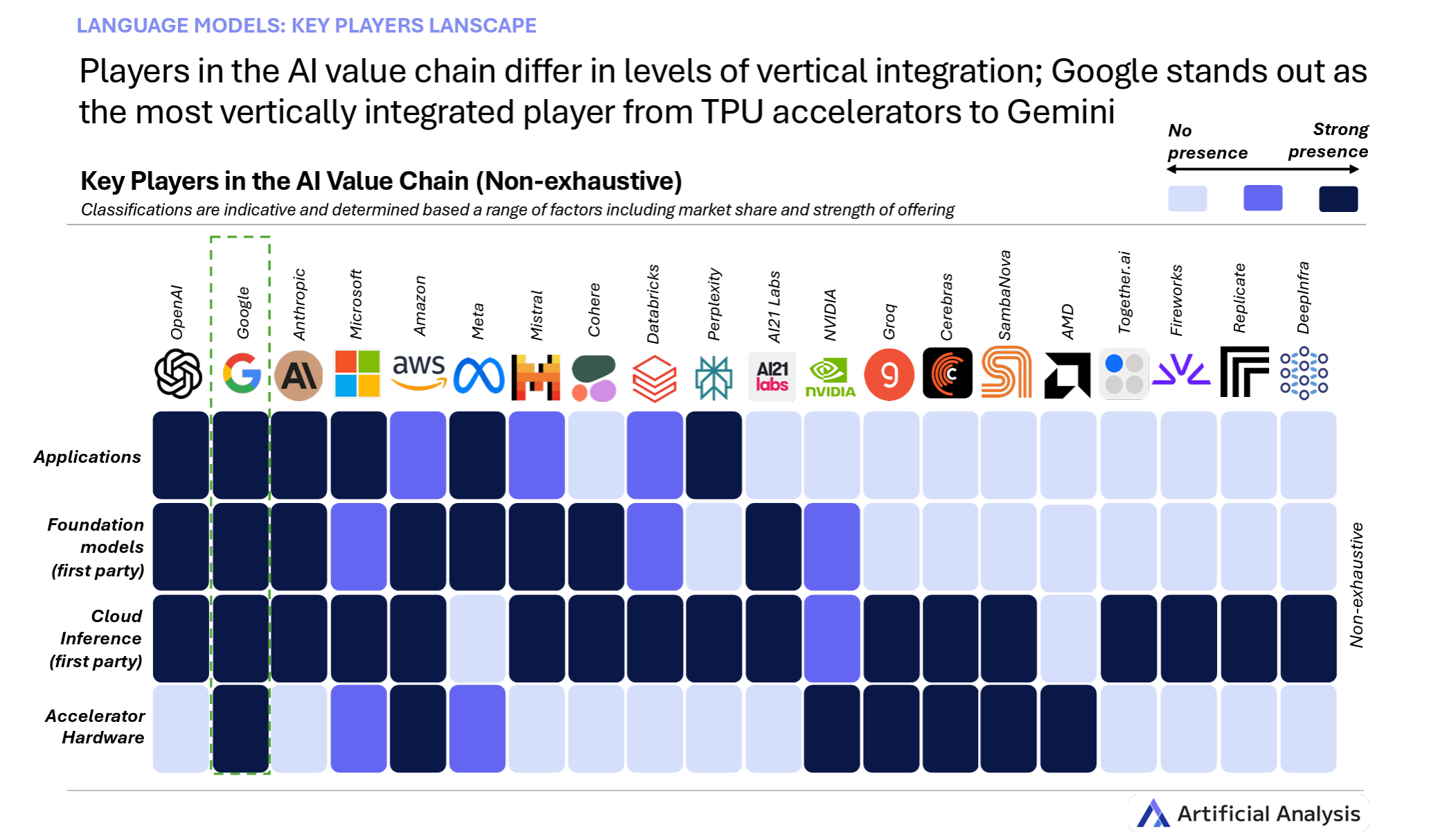

Language Models Key Players Landscape

Language Models Key Players Landscape. Image credit: Artificial Analysis

Artificial Analysis Intelligence Index

Image credit: Artificial Analysis

Roadmap To Become An AI Generalist

AI Links of Interest

AI Projects